Building a Living Database, Part 3: Software Development

In the final part of the series, we take a deep dive into the technical development of our flagship Digital Holocaust Memory Database – a ‘living’ database-archive of the world’s digital Holocaust memory projects – including the technical trade-offs that needed to be made along the way and how the team established feedback loops for constant improvement.

By James Alvarez, Senior Web Developer, University of Sussex

We began the process by holding a series of workshops, based on the Joint Application Design ethos.

During this Discovery phase, we got everyone involved in the project round the table and started from basic principles: the aims of the project, what success looks like, and the characteristics of our users.

This ultimately feeds into a document of knowledge about who our users are and what they are trying to do – and outlines this in a very concrete way to ground planning activities.

Starting from these overviews is crucial in software planning. We wanted to tailor our approach to the intended users, and without being fully aware of what users are trying to do, it’s easy to lose track of where to focus effort.

We could then begin to share our ideas of what the app would look like, and we collaborated visually on outlines for the structure of the site and the content of different pages. A series of in-person and asynchronous iterations resulted in a wireframe document, which acted as a key starting point for the software development process.

The challenges of digital Holocaust memory projects

The main challenge is optimising the architecture for long-term maintainability. Software is subject to a constantly-moving tide of obsolescence, as well as trends.

The absolute ideal case is that in 10 years, it should be possible to find a developer who can easily understand and modify this project. To achieve this, we avoid flash-in-the-pan technologies and instead use plain browser tech – CSS and JavaScript (JS) – rather than relying on compilers with trendy variations (Tailwind, React etc).

“Maintainability is a key factor in this project.”

We build from a basic HTML5 version that allows the site to be usable on any browser, even without being JS enabled, and apply the concept of ‘progressive enhancement’, where JS is added to provide additional useful functionality where possible.
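
As a rough illustration of progressive enhancement, here is a minimal sketch in plain JS. It assumes a server-rendered search form that already works as a normal page request, and layers instant filtering on top when JS is available. The `#project-search` id, the `data-project-card` attribute and both function names are hypothetical, chosen for this example rather than taken from the project’s code.

```javascript
// Hypothetical sketch: selectors, attributes and function names are
// assumptions for illustration, not the project's actual code.

// Pure helper: does a card's text match the search query?
function matchesQuery(text, query) {
  const needle = query.trim().toLowerCase();
  return needle === "" || text.toLowerCase().includes(needle);
}

// Enhancement: filter the already-rendered results as the user types.
// Without JS, the form still submits to the server as a normal request.
function enhanceSearch(doc) {
  const input = doc.querySelector("#project-search");
  const cards = doc.querySelectorAll("[data-project-card]");
  if (!input || cards.length === 0) return false; // nothing to enhance

  input.addEventListener("input", () => {
    for (const card of cards) {
      card.hidden = !matchesQuery(card.textContent, input.value);
    }
  });
  return true;
}

// Only run in a browser; elsewhere this block is skipped.
if (typeof document !== "undefined") {
  document.addEventListener("DOMContentLoaded", () => enhanceSearch(document));
}
```

The point of the pattern is that the HTML is complete on its own: if the script fails to load or is disabled, nothing is hidden and the page behaves exactly like the basic version.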

Along the way, when making software architectural decisions, we faced an axis of trade-offs, where an expert decision needed to be based on current and future conditions, for example:

FOSS vs build-your-own

Using open-source software saves a huge amount of time, scaffolding the project with tried-and-tested code. With popular frameworks, for example Laravel / Django, maintenance and security updates are handled well by the extensive online communities. There is also the benefit that many other programmers will be familiar with these tools, providing assurance of future maintainability. The danger comes when using packages that are less popular and therefore may become abandoned or develop security issues. Maintainability is a key factor in this project, so we look for projects with multiple contributors that are used extensively in other contexts across the internet – and in many cases, we write plain JS where a suitable library that meets the conditions cannot be found.

New shiny tech vs old but reliable

It’s often tempting to use newer, trendy technologies, as they frequently promise quicker development times and a better developer experience. The downside is that they are often updated so rapidly that your own project can break. Using untrendy but stable tech is often the more reliable approach – and for web tech, PHP is about as ‘untrendy but stable’ as it gets.

Sustainability

A major decision at the beginning was choosing between Django with Python and Laravel with PHP. The differences in development experience are marginal, but we took into account that Python with Django could use up to three times more power and memory than Laravel. That comparison does not take into account PHP’s highly mature optimisation tools, for example OPcache, or Laravel’s caching.

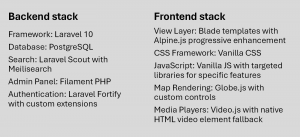

Technical strategy

We managed version control using Git, enabling structured collaboration and traceability throughout the development process.

The admin backend was built using Filament PHP, which allowed for rapid development of data entry interfaces tailored to the structure and workflow of the project. This also ensured consistency across content types and enabled non-technical users to manage data with minimal training.

Deployment is handled through a CI/CD pipeline, allowing code to be automatically deployed to staging or production environments. A staging environment is maintained to test all changes prior to release.

“When making software architectural decisions, we faced an axis of trade-offs.”

We developed a structured user run-through process, where members of the project team manually tested the application after each milestone. These sessions were vital and helped us to verify core functionality, identify bugs, and log issues with screenshots and descriptions.

In this way, we could confidently create a clear feedback cycle and, importantly, ensure the consistent resolution of problems.

The Living Database launches in January 2026.

Is your digital project missing from our Digital Holocaust Memory Map? If so, please take a moment to complete our survey about your organisation’s digital projects, strategies and infrastructure.

Image credit: Wikimedia Commons, File title: 2h Namur 13, Image description: St Aubin’s Cathedral (Belgium), attribution: I, Luc Viatour, CC BY-SA 3.0, via Wikimedia Commons

Want to know more?

Building a Living Database, Part 1: Mapping the World’s Digital Holocaust Memory Projects

Building a Living Database, Part 2: Gathering and Preparing Content